In the E-Discovery Bubble, we’re embroiled in a debate over “Linked Attachments.” Or should we say “Cloud Attachments,” or “Modern Attachments” or “Hyperlinked Files?” The name game aside, a linked or Cloud attachment is a file that, instead of being tucked into an email, gets uploaded to the cloud, leaving a trail in the form of a link shared in the transmitting message. It’s the digital equivalent of saying, “It’s in an Amazon locker; here’s the code” versus handing over a package directly. An “embedded attachment” travels within the email, while a “linked attachment” sits in the cloud, awaiting retrieval using the link.

Some recoil at calling these digital parcels “attachments” at all. I stick with the term because it captures the essence of the sender’s intent to pass along a file, accessible only to those with the key to retrieve it, versus merely linking to a public webpage. A file I seek to put in the hands of another via email is an “attachment,” even if it’s not an “embedment.” Oh, and Microsoft calls them “Cloud Attachments,” which is good enough for me.

Regardless of what we call them, they’re pivotal in discovery. If you’re on the requesting side, prepare for a revelation. And if you’re a producing party, the party’s over.

A Quick March Through History

Nascent email conveyed basic ASCII text but no attachments. In the early 90s, the advent of Multipurpose Internet Mail Extensions (MIME) enabled files to hitch a ride on emails as binary data encoded into ASCII text using Base64. This tech pivot meant attachments could join emails as encoded stowaways, to be unveiled upon receipt.

For two decades, this embedding magic meant capturing an email also netted its attachments. But come the early 2010s, the cloud era beckoned. Files too bulky for email began diverting to cloud storage with emails containing only links or “pointers” to these linked attachments.
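To make the difference concrete, here is a minimal sketch using Python's standard `email` library. The subject line, file name, payload and share URL are all made up for illustration. It builds one message with an embedded attachment and one with only a link, then asks each message what files it carries.

```python
from email.message import EmailMessage

def build_embedded(payload: bytes) -> EmailMessage:
    """Message whose file travels inside it as a Base64-encoded MIME part."""
    msg = EmailMessage()
    msg["Subject"] = "Q3 forecast"
    msg.set_content("See attached.")
    # Bytes content is Base64-encoded by default when attached.
    msg.add_attachment(payload, maintype="application",
                       subtype="octet-stream", filename="forecast.xlsx")
    return msg

def build_linked(url: str) -> EmailMessage:
    """Message that carries only a pointer to a file stored elsewhere."""
    msg = EmailMessage()
    msg["Subject"] = "Q3 forecast"
    msg.set_content(f"See the file here: {url}")
    return msg

# Hypothetical payload and share link, for illustration only.
embedded = build_embedded(b"revenue shortfall")
linked = build_linked("https://cloud.example.com/share/abc123")

# Capturing the embedded message captures the file's bytes too.
embedded_files = [part.get_content() for part in embedded.iter_attachments()]
# Capturing the linked message yields no file at all, just the link text.
linked_files = [part.get_content() for part in linked.iter_attachments()]

print(embedded_files)  # the original bytes, decoded from Base64
print(linked_files)    # an empty list
```

The embedded message surrenders its file to anyone holding the message. The linked message surrenders nothing until someone follows the link.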

The Crux of the Matter

Linked attachments aren’t newcomers; they’ve been lurking for over a decade. Yet, there’s a growing “aha” moment among requesters as they realize the promised exchange of digital parcels hasn’t been as expected. Increasingly—and despite contrary representations by producing parties—relevant, responsive and non-privileged attachments to email aren’t being produced because relevant, responsive and non-privileged attachments aren’t being searched.

Wait! What? Say that again.

You heard me. As attachments shifted from being embedded to being linked, producing parties simply stopped collecting and searching those attachments.

How is that possible? Why didn’t they disclose that?

I’ll explain if you’ll indulge me in another history lesson.

Echoes From the Past

Traditionally, discovery leaned on indexing the content of email and attachments for quicker search, bypassing the need to sift through each individually. Every service provider employs indexed search.

When attachments are embedded in messages, those attachments are collected with the messages, then indexed and searched. But when those attachments are linked instead of embedded, collecting them requires an added step of downloading the linked attachments with the transmitting message. You must do this before you index and search because, if you fail to do so, the linked attachments aren’t searched or tied to the transmitting message in a so-called “family relationship.”
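The ordering matters, and a rough sketch shows why. What follows is a toy illustration, not any vendor's method: the `fetch` callable stands in for whatever platform tool retrieves a linked file, and the URL pattern, document IDs and `fake_cloud` store are hypothetical. The point is only that the download happens before indexing and that each retrieved file keeps a pointer to its transmitting message.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set

# Simplistic URL matcher; real messages need platform-aware link parsing.
LINK_PATTERN = re.compile(r"https?://[^\s<>\"']+")

@dataclass
class Document:
    doc_id: str
    text: str
    parent_id: Optional[str] = None  # ID of the transmitting message, if any

def collect_family(message_id: str, body: str,
                   fetch: Callable[[str], Optional[str]]) -> List[Document]:
    """Collect a message plus every linked file that can be retrieved."""
    family = [Document(message_id, body)]
    for n, url in enumerate(LINK_PATTERN.findall(body), start=1):
        content = fetch(url)
        if content is None:
            continue  # unreachable link; a real workflow would log this
        family.append(Document(f"{message_id}.{n}", content, parent_id=message_id))
    return family

def build_index(docs: List[Document]) -> Dict[str, Set[str]]:
    """Toy inverted index mapping each term to the documents containing it."""
    index: Dict[str, Set[str]] = {}
    for doc in docs:
        for term in set(re.findall(r"[a-z0-9]+", doc.text.lower())):
            index.setdefault(term, set()).add(doc.doc_id)
    return index

# Hypothetical cloud store and message, for illustration only.
fake_cloud = {"https://cloud.example.com/share/abc123":
              "Projected revenue shortfall for Q3"}
body = "Please review: https://cloud.example.com/share/abc123"

family = collect_family("MSG001", body, fake_cloud.get)
index_with_links = build_index(family)         # linked file collected first
index_without_links = build_index(family[:1])  # message only, link ignored

print(index_with_links.get("shortfall"))
print(index_without_links.get("shortfall"))
```

Skip the download step and the keyword simply isn't in the index, no matter how carefully the search is run afterward.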

They aren’t searched. Not because they are immaterial, irrelevant or, in any absolute sense, inaccessible; a linked attachment is as amenable to being indexed and searched as any other document. They aren’t searched because they aren’t collected; and they aren’t collected because it’s easier to blow off linked attachments than collect them.

Linked attachments, squarely under the producer’s control, pose a quandary. A link in an email is a dead-end for anyone but the sender and recipients and reveals nothing of the file’s content. These linked attachments could be brimming with relevant keywords yet remain unexplored if not collected with their emails.

So, over the course of the last decade, how many times has an opponent revealed that, despite a commitment to search a custodian’s email, they were not going to collect and search linked documents?

The curse and blessing of long experience is having seen it all before. Every generation imagines they invented sex, drugs and rock-n-roll, and every new information and communication technology is followed by what I call the “getting-away-with-murder” phase in civil discovery. Litigants claim that whatever new tech has wrought is “too hard” to deal with in discovery, and they get away with murder by not having to produce the new stuff until long after we have the means and methods to do so. I lived through that with email, native production, then mobile devices, web content and now, linked attachments.

This isn’t just about technology but transparency and diligence in discovery. The reluctance to tackle linked attachments under claims of undue burden echoes past reluctances with emerging technologies. Yet, linked attachments, integral to relevance assessments, shouldn’t be sidelined.

What is the Burden, Really?

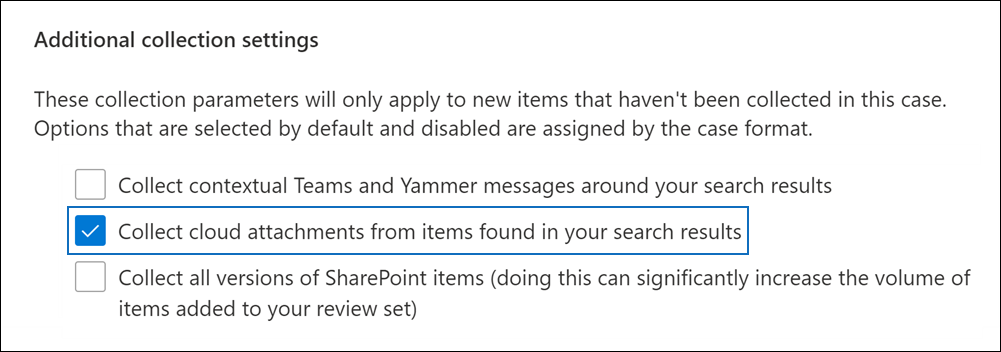

We see conclusory assertions of burden notwithstanding that the biggest platforms like Microsoft and Google offer ‘pretty good’ mechanisms to deal with linked attachments. So, if a producing party claims burden, it behooves the Court and requesting parties to inquire into the source of the messaging. When they do, judges may learn that the tools and techniques to collect linked attachments and preserve family relationships exist, but the producing party elected not to employ them. Granted, these tools aren’t perfect; but they exist, and perfect is not the standard, just as pretending there are no solutions and doing nothing is not the standard.

Claims that collecting linked attachments poses an undue burden because of increased volume are mostly nonsense. The longstanding practice has been to collect a custodian’s messages and ALL embedded attachments, then index and search them. With few exceptions, the number of items collected won’t differ materially whether the attachment is embedded or linked (although larger files tend to be linked). So, any party arguing that collecting linked attachments will require the search of many more documents than before is fibbing or out of touch. I try not to attribute to guile that which may be explained by ignorance, so let’s go with the latter.

Half Baked Solutions

Challenged for failing to search linked attachments, a responding party may protest that they searched the transmitting emails and even commit to collecting and searching linked attachments to emails containing search hits. Sounds reasonable, right? Yet, it’s not even close to reasonable. Here’s why:

When using lexical (e.g., keyword) search to identify potentially responsive email “families,” the customary practice is to treat a message and its attachments as potentially responsive if either the content of the transmitting message or its attachment generates search “hits” for the keywords and queries run against them. This is sensible because transmittals often say no more than, “see attached;” it’s the attachment that holds the hits. Yet, stripped of its transmittal, you won’t know the timing or circulation of the attachment. So, we preserve and disclose email families.

But, if we rely upon the content of transmitting messages to prompt a search of linked attachments, we will miss the lion’s share of responsive evidence. If we produce responsive documents without tying them to their transmittals, we can’t tell who got what and when. All that “what did you know and when did you know it” matters.
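The gap between the two approaches can be sketched in a few lines. This is a deliberately naive illustration that uses case-insensitive substring matching in place of a real search engine, with made-up sample text and hypothetical function names.

```python
from typing import List

def has_hit(text: str, keywords: List[str]) -> bool:
    """Naive keyword test: case-insensitive substring match."""
    lowered = text.lower()
    return any(keyword.lower() in lowered for keyword in keywords)

def family_is_responsive(transmittal: str, attachments: List[str],
                         keywords: List[str]) -> bool:
    """Customary rule: a hit anywhere in the family flags the whole family."""
    return has_hit(transmittal, keywords) or any(
        has_hit(attachment, keywords) for attachment in attachments)

def transmittal_triggers_search(transmittal: str, keywords: List[str]) -> bool:
    """Half-baked rule: only a hit in the message body prompts a look at the link."""
    return has_hit(transmittal, keywords)

# Made-up example: a terse transmittal and a substantive attachment.
transmittal = "See attached."
attachments = ["Projected revenue shortfall for Q3"]
keywords = ["shortfall"]

family_rule = family_is_responsive(transmittal, attachments, keywords)
half_baked_rule = transmittal_triggers_search(transmittal, keywords)

print(family_rule)      # True
print(half_baked_rule)  # False
```

Under the half-baked rule, the attachment in this example is never retrieved, so nobody learns it held the hit.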

Why Guess When You Can Measure?

Hopefully, you’re wondering how many hits suggesting relevance occur in transmittals, how many in attachments and how many in both. Great questions! Happily, we can measure these things. For messages with embedded attachments, we can determine the percentage of hits that occur in the transmitting messages versus the percentage that occur in their attachments.

If you determine that, say, half of hits were within embedded attachments, then you can fairly attribute the same proportion to the linked attachments that aren’t being searched. In that sense, you can estimate how much you’re missing and ascertain a key component of a proper proportionality analysis.
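The arithmetic is simple enough to sketch. The counts below are invented for illustration, and the extrapolation rests on one loudly stated assumption: that linked attachments hold hits at the same rate as embedded ones. Each family is reduced to two flags, whether the transmittal hit and whether any attachment hit.

```python
from collections import Counter
from typing import Iterable, Tuple

def hit_breakdown(families: Iterable[Tuple[bool, bool]]) -> Counter:
    """Tally responsive families by where the hits occurred.

    Each family is (transmittal_hit, attachment_hit); families with no
    hits at all are not counted.
    """
    counts: Counter = Counter()
    for transmittal_hit, attachment_hit in families:
        if transmittal_hit and attachment_hit:
            counts["both"] += 1
        elif transmittal_hit:
            counts["transmittal_only"] += 1
        elif attachment_hit:
            counts["attachment_only"] += 1
    return counts

def attachment_only_share(counts: Counter) -> float:
    """Fraction of responsive families found only through an attachment."""
    responsive = sum(counts.values())
    return counts["attachment_only"] / responsive if responsive else 0.0

def estimate_missed(linked_transmittal_hits: int, share: float) -> float:
    """Estimate linked families missed when only transmittals are searched.

    Assumes linked attachments behave like embedded ones. If T families
    were found via transmittal hits and a fraction p of responsive
    families are attachment-only, the missed count is T * p / (1 - p).
    """
    if share >= 1.0:
        raise ValueError("share must be below 1 to extrapolate")
    return linked_transmittal_hits * share / (1.0 - share)

# Invented sample of families with embedded attachments.
sample = ([(True, False)] * 30 + [(True, True)] * 20 +
          [(False, True)] * 50 + [(False, False)] * 100)

counts = hit_breakdown(sample)
share = attachment_only_share(counts)
missed = estimate_missed(40, share)  # 40 linked families hit on transmittal

print(counts, share, missed)
```

With these invented numbers, half of the responsive families surface only through their attachments, so 40 linked families found by transmittal hits imply roughly another 40 that went unseen.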

So why don’t producing parties asserting burden supply this crucial metric?

The Path Forward

Producing parties have been getting away with murder on linked attachments for so long that they’ve come to view it as an entitlement. Linked attachments are squarely within the ambit of what must be assessed for relevance. The potential for a linked attachment to be responsive is no less than that of an item transmitted as an embedded attachment. So, let’s stop pretending they have a different character in terms of relevance and devote our energies to fixing the process.

Collecting linked attachments isn’t as Herculean as some claim, especially with tools from giants like Microsoft and Google easing the process. The challenge, then, isn’t in the tools but in the willingness to employ them.

Do linked attachments pose problems? They absolutely do! I’ve glossed over ancillary issues of versioning and credentials because those concerns reside in the realm between good and perfect solutions. Collection methods must be adapted to them, with clumsy workarounds at first and seamless solutions soon enough. But in acknowledging that there are challenges, we must also acknowledge that these linked attachments have been around for years, and they are evidence. Waiting until the crisis stage to begin thinking about how to deal with them was a choice, and a poor one. I shudder to think of the responsive information ignored every single day because this issue is inadequately appreciated by counsel and courts.

Happily, this is simply a technical challenge and one starting to resolve. Speeding the race to resolution requires that courts stop giving a free pass to the practice of ignoring linked attachments. Abraham Lincoln defined a hypocrite as a “man who murdered his parents, and then pleaded for mercy on the grounds that he was an orphan.” Producing parties created the problem and ignored it for years, so it seems disingenuous to indulge their pleas for mercy.

In Conclusion

We’re at a crossroads, with technical solutions within reach and the legal imperative clearer than ever. It’s high time we bridge the gap between digital advancements and discovery obligations, ensuring that no piece of evidence, linked or embedded, escapes scrutiny.